Wearable tech lets users operate robots using everyday gestures, even on the move

Introduction

Imagine controlling a machine with a simple wave of your hand while you’re jogging on a trail or riding in a car. A new wearable device makes this possible by translating everyday gestures into commands for machines and robots, even while the user is in motion.

Developed by engineers at the University of California San Diego, the system accurately interprets a user’s gestures during activities such as running, driving, or travelling by boat at sea.

Achieving reliable gesture recognition in real-world, dynamic settings has been a persistent challenge for wearable technology. The issue lies in the sensors themselves; when users move too much, the resulting “motion noise” overwhelms the subtle signals meant for gesture control.

To tackle this problem and create a more practical piece of wearable tech, the UC San Diego team turned to a powerful combination: soft, stretchable electronics and artificial intelligence.

“By integrating AI to clean noisy sensor data in real time, the technology enables everyday gestures to reliably control machines even in highly dynamic environments,” explained Xiangjun Chen, a postdoctoral researcher at the UC San Diego Jacobs School of Engineering and co-first author of the study.

Built for Motion, Tested at Sea

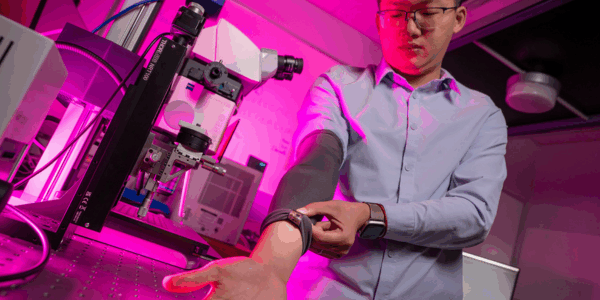

The device is an integrated system with a compact, multi-layered design. A soft electronic patch attached to a cloth armband houses motion and muscle sensors, a Bluetooth microcontroller, and a stretchable battery.

To ensure robustness, the system was trained on a comprehensive dataset that included real gestures performed under various challenging conditions, from the jostling of a run to the rhythmic sway of ocean waves.

In practice, signals from the wearer’s arm are captured and immediately processed by a custom deep-learning framework. This AI acts as a smart filter, stripping away interference to interpret the intended gesture and then transmitting a command to a machine—like a robotic arm—in real time.
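For readers who want a concrete picture of that capture, clean, interpret and transmit sequence, here is a minimal Python sketch. It is not the researchers' method: a simple median filter stands in for their deep-learning framework, and the gesture names, signal levels and command strings are all hypothetical, chosen only to show the flow of data.

```python
from statistics import fmean, median

# Hypothetical gesture-to-command table; the article does not list the real gestures.
COMMANDS = {"fist": "GRIP", "open": "RELEASE"}

# Hypothetical mean signal level per gesture, standing in for a trained classifier.
TEMPLATES = {"fist": 0.8, "open": 0.2}


def denoise(samples, width=3):
    """Median filter used as a stand-in for the learned denoiser (an assumption)."""
    half = width // 2
    return [
        median(samples[max(0, i - half): i + half + 1])
        for i in range(len(samples))
    ]


def classify(samples):
    """Pick the gesture whose template level is closest to the window's mean."""
    level = fmean(samples)
    return min(TEMPLATES, key=lambda gesture: abs(TEMPLATES[gesture] - level))


def to_command(raw_window):
    """Clean one window of sensor samples, classify it, and map it to a command."""
    return COMMANDS[classify(denoise(raw_window))]


# A "fist" signal corrupted by one large motion spike.
noisy_window = [0.8, 0.8, -3.0, 0.8, 0.8]
print(to_command(noisy_window))
```

With the spike left in, the window's average falls close to the "open" level and the wrong command would be sent. After filtering, the same window is read as "fist" and the sketch prints GRIP. The real system faces far messier interference, which is why the team trained a deep-learning model rather than relying on a fixed filter.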

“This advancement brings us closer to intuitive and robust human-machine interfaces that can be deployed in daily life,” Chen added.

The wearable was put through rigorous testing in multiple high-motion scenarios. It successfully controlled a robotic arm while the user was running, while subjected to strong vibrations, and under a mix of other disturbances. Its performance was further validated in an ocean simulator that replicated both lab-generated and real sea motions. In every test, the system maintained accurate and low-latency performance.

Conclusion

The technology has potential applications in healthcare, industry, emergency response, and underwater work. For instance, patients in rehabilitation or those with limited mobility could use natural gestures to operate robotic aids without needing precise fine motor skills. Industrial workers and first responders could benefit from hands-free control of tools and robots in hazardous or high-motion environments. The technology could also enable divers to command underwater robots with simple gestures.

“This work establishes a new method for noise tolerance in wearable sensors,” Chen said. “It paves the way for next-generation wearable systems that are not only stretchable and wireless, but also capable of learning from complex environments and individual users.”

The research, titled ‘A noise-tolerant human-machine interface based on deep learning-enhanced wearable sensors’, has been published in the journal Nature Sensors.

Copyright 2025 The Institution of Engineering and Technology. All Rights Reserved.
